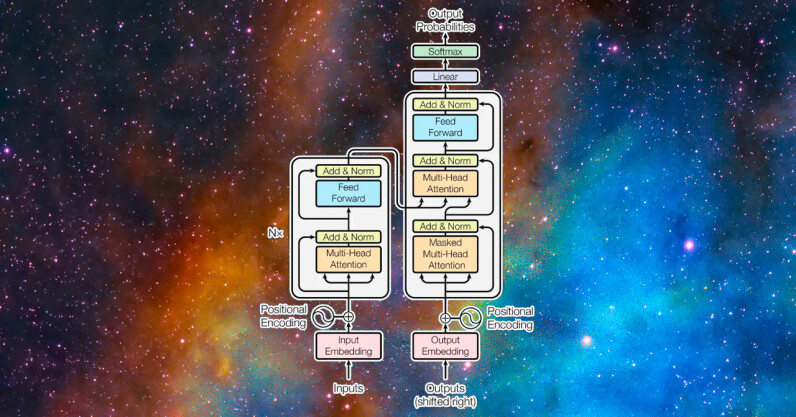

This article is part of Demystifying AI, a series of posts that (try to) disambiguate the jargon and myths surrounding AI. (In partnership with Paperspace) In recent years, the transformer model has become one of the main highlights of advances in deep learning and deep neural networks. It is mainly used for advanced applications in natural language processing. Google is using it to enhance its search engine results. OpenAI has used transformers to create its famous GPT-2 and GPT-3 models. Since its debut in 2017, the transformer architecture has evolved and branched out into many different variants, expanding beyond language…

This story continues at The Next Web

No comments:

Post a Comment