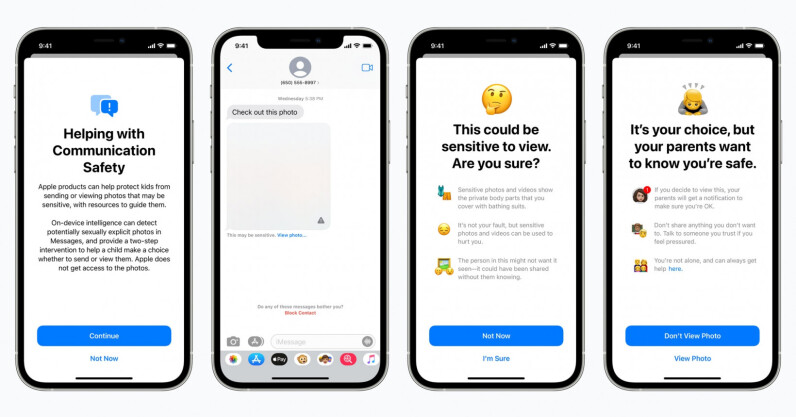

Last night, Apple made a major announcement: it will scan iPhones in the US for Child Sexual Abuse Material (CSAM). As part of this initiative, the company is partnering with the government and making changes to iCloud, iMessage, Siri, and Search. However, security experts are worried about surveillance and the risk of data leaks. Before looking at those concerns, let's understand what exactly Apple is doing. How does Apple plan to scan iPhones for CSAM images? A large set of features for CSAM scanning relies on fingerprinted images provided by the National Center for Missing and Exploited Children…

This story continues at The Next Web
